As robotics technology keeps moving forward, we’re getting closer to integrating robots into our daily routines. Yet this also brings challenges, such as teaching robots how to interact with humans: robots must not only see and understand what we’re doing but also respond in real time to complex social behaviours. Until now, robots have had no standard way of representing the people around them, so engineers have developed custom solutions for each and every robot. This is where ROS4HRI steps in.

The challenge lies in the lack of a standard model for human representation that would facilitate the development and interoperability of social perception components and pipelines. Benchmarking and reproducibility in Human-Robot Interaction (HRI) are hard to achieve because of the inherent complexity and diversity of human behaviours in real-time, multiparty situations. The absence of standard, open-source software for human representation makes this even harder. This absence can be attributed to the relatively low maturity of some of the underlying detection and processing algorithms, coupled with the complexity of HRI pipelines. ROS4HRI aims to bridge this gap.

Introducing ROS4HRI: Enhancing benchmarking and reproducibility

To address these challenges, our Senior Scientist in Software Engineering, Dr. Severin Lemaignan, worked with the team at PAL Robotics to develop ROS4HRI. ROS4HRI stands for “ROS for Human-Robot Interaction”: a framework designed to promote interoperability and reuse of core functionality across HRI-related software tools, from skeleton tracking to face recognition to natural language processing. Crucially, its interfaces are meant to apply to a broad range of HRI applications, from high-level crowd simulation to detailed modelling of human kinematics.

Introduced in 2021 and accepted in 2022 by the Open Source Robotics Foundation (OSRF) as REP-155, an open ROS specification, ROS4HRI offers a set of conventions and standard interfaces for HRI scenarios. Built on the Robot Operating System (ROS), it fully integrates with the wider ROS ecosystem and does not depend on any specific type of robot.

ROS4HRI could significantly improve benchmarking and reproducibility by establishing common interfaces, so that different algorithms developed within the community can be compared directly. It also supports sustainable software development: components built on these interfaces are platform-agnostic and can be shared among different systems.

ROS4HRI layer by layer

ROS4HRI can be visualised as a layered structure, much like the layers of an onion. At its core lies the specification itself. Surrounding this core is the first layer, the blue ring, made up of the open-source software libraries that bring the specification to life, including essential elements such as ‘hri_msgs’ and a suite of C++/Python libraries, shown as blue and yellow segments. Beyond these libraries, a green ring holds various tools designed to make ROS4HRI easier to use, helping developers and researchers harness the potential of the framework. Finally, the outer layer, the purple ring, contains the open-source reference implementation: a set of software modules that forms the backbone of ROS4HRI and provides fundamental functionality, particularly for human detection. Together, these layers make up the architecture of ROS4HRI, each contributing to its robustness and versatility.

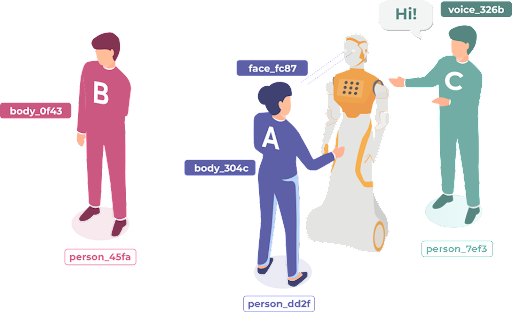

Human and group representation models, including body and face identifiers

At the heart of ROS4HRI is a human representation model designed to accommodate the existing tools and techniques used to detect and recognise humans. This model relies on four kinds of unique identifiers: face, body, voice, and person identifiers. These identifiers are not mutually exclusive; which ones are used depends on the requirements of the application and the available sensing capabilities.

- Face Identifier: This unique ID identifies a detected face, typically generated by the face detector/head pose estimator upon face detection.

- Body Identifier: Similar to the face ID, the body identifier pertains to a person’s body and is created by the skeleton tracker upon detection of a person.

- Voice Identifier: Likewise, voice separation algorithms can assign a unique, non-persistent ID to each detected voice.

- Person Identifier: A unique ID permanently associated with a specific person, assigned by a module capable of person identification. This ID is persistent, so that the robot can recognise people across encounters and sessions. Each person identifier is linked to the person’s current face, body, and voice identifiers (these links can change over time, for instance when the person’s face is re-detected on a different day).
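To make the relationship between these identifiers concrete, here is a minimal Python sketch of a registry that links a persistent person ID to the transient face, body and voice IDs currently associated with it. The `Person` and `PersonRegistry` names and the example ID strings are hypothetical illustrations, not part of the ROS4HRI libraries or of the hri_msgs messages; the sketch only mirrors the rules described above (every link is optional and can change, while the person ID stays the same).

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Person:
    """Persistent person record (hypothetical, for illustration).

    face_id, body_id and voice_id are transient detection IDs; any of them
    may be None, e.g. when the person is heard but not seen.
    """
    person_id: str
    face_id: Optional[str] = None
    body_id: Optional[str] = None
    voice_id: Optional[str] = None


class PersonRegistry:
    """Maps persistent person IDs to their current transient IDs."""

    def __init__(self) -> None:
        self.persons: Dict[str, Person] = {}

    def associate(self, person_id: str, *, face_id: Optional[str] = None,
                  body_id: Optional[str] = None,
                  voice_id: Optional[str] = None) -> Person:
        """Create the person if needed, then update only the links provided."""
        person = self.persons.setdefault(person_id, Person(person_id))
        if face_id is not None:
            person.face_id = face_id
        if body_id is not None:
            person.body_id = body_id
        if voice_id is not None:
            person.voice_id = voice_id
        return person

    def person_for_face(self, face_id: str) -> Optional[str]:
        """Return the person currently linked to face_id, if any."""
        for person in self.persons.values():
            if person.face_id == face_id:
                return person.person_id
        return None


registry = PersonRegistry()
registry.associate("alice", face_id="f1a2b", body_id="b9c8d")  # first encounter
registry.associate("alice", face_id="f7e6d")  # later session: new face ID
print(registry.persons["alice"])
# Person(person_id='alice', face_id='f7e6d', body_id='b9c8d', voice_id=None)
```

Re-detecting the face on a later day replaces only the face link; the person ID and the remaining links are untouched, which is what lets the robot recognise the same person across sessions.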

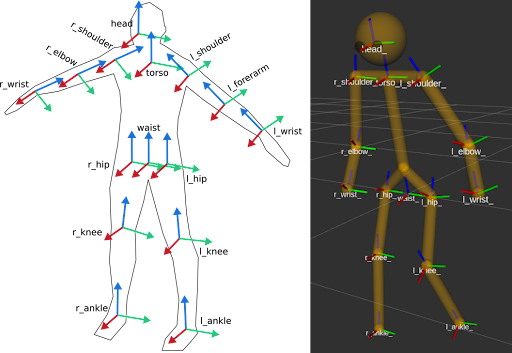

In addition to the human representation model, ROS4HRI defines a Human Kinematic Model based on the URDF standard. This model adapts to variations in human anatomy, deriving its dimensions from standard anthropometric models. The generated URDF model is published on the ROS parameter server and used, along with the joint states of each tracked body, to generate a real-time 3D model of the person.
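As a rough illustration of deriving a kinematic model from anthropometry, the Python sketch below generates a minimal URDF for a single arm whose segment lengths scale with a person’s height. The segment ratios are approximate values of the kind listed in anthropometric tables and are an assumption made for illustration; the function name, the link and joint naming scheme and the joint limits are hypothetical and do not reproduce the model that ROS4HRI actually generates.

```python
import xml.etree.ElementTree as ET

# Approximate segment lengths as fractions of standing height (illustrative
# assumption, NOT the values used by ROS4HRI's model generator).
SEGMENT_RATIOS = {"upper_arm": 0.186, "forearm": 0.146}

# (joint name, child link) pairs from the torso outwards (hypothetical naming)
ARM_CHAIN = (("shoulder", "upper_arm"), ("elbow", "forearm"), ("wrist", "hand"))


def arm_urdf(body_id: str, height_m: float) -> str:
    """Return a minimal URDF string for one arm, scaled to height_m metres."""
    robot = ET.Element("robot", name=f"human_{body_id}")
    parent = "torso"
    ET.SubElement(robot, "link", name=f"{parent}_{body_id}")
    offset = 0.0  # the shoulder joint sits at the torso link origin here
    for joint_name, child in ARM_CHAIN:
        ET.SubElement(robot, "link", name=f"{child}_{body_id}")
        joint = ET.SubElement(robot, "joint", name=f"{joint_name}_{body_id}",
                              type="revolute")
        ET.SubElement(joint, "parent", link=f"{parent}_{body_id}")
        ET.SubElement(joint, "child", link=f"{child}_{body_id}")
        # Each joint hangs below the previous one by the parent segment length
        ET.SubElement(joint, "origin", xyz=f"0 0 {0.0 - offset:.4f}", rpy="0 0 0")
        ET.SubElement(joint, "axis", xyz="0 1 0")
        ET.SubElement(joint, "limit", lower="-3.14", upper="3.14",
                      effort="10", velocity="2")
        # Length of the link just added becomes the next joint's offset
        offset = SEGMENT_RATIOS.get(child, 0.0) * height_m
        parent = child
    return ET.tostring(robot, encoding="unicode")


print(arm_urdf("b9c8d", 1.75))
```

Scaling every segment from a single measurement keeps the model plausible for people of different sizes; a fuller generator would take more measurements and cover the whole body.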

For groups, detected group-level interactions are published on a dedicated topic. Each group is defined by a unique group ID and a list of person IDs.
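The group detector itself is not specified here, so the Python sketch below swaps in a deliberately naive stand-in: single-linkage clustering of people whose ground-plane positions lie within a distance threshold, implemented with union-find. Its output follows the structure described above (a group ID plus a list of person IDs); the `Group` class, the `grp<n>` ID format and the 1.2 m threshold are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Group:
    """A detected group: an ID plus its members' person IDs."""
    group_id: str
    member_ids: List[str]


def group_by_proximity(positions: Dict[str, Tuple[float, float]],
                       max_dist: float = 1.2) -> List[Group]:
    """Naive single-linkage grouping of people closer than max_dist metres."""
    ids = sorted(positions)
    parent = {pid: pid for pid in ids}  # union-find forest

    def find(pid: str) -> str:
        while parent[pid] != pid:
            parent[pid] = parent[parent[pid]]  # path halving
            pid = parent[pid]
        return pid

    # Merge every pair of people standing closer than the threshold
    for i, a in enumerate(ids):
        for b in ids[i + 1:]:
            if math.dist(positions[a], positions[b]) < max_dist:
                parent[find(a)] = find(b)

    clusters: Dict[str, List[str]] = {}
    for pid in ids:
        clusters.setdefault(find(pid), []).append(pid)

    # A lone person is not a group
    members = [m for m in clusters.values() if len(m) > 1]
    return [Group(f"grp{n}", m) for n, m in enumerate(members)]


people = {"p1": (0.0, 0.0), "p2": (0.8, 0.0), "p4": (1.6, 0.0), "p3": (5.0, 5.0)}
print(group_by_proximity(people))
# [Group(group_id='grp0', member_ids=['p1', 'p2', 'p4'])]
```

Single linkage chains p1 and p4 into one group through p2 even though they are 1.6 m apart. A real system would also need group IDs that stay unique and stable across updates, which this sketch does not attempt.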

Visualisation tools, including 3D skeletons

In addition to a standard for describing humans, ROS4HRI brings several new tools for visualising the humans interacting with the robot, extending the ROS RViz and rqt tools.

“humans” RViz plugin

“Humans” is an RViz plugin for visualising human perception features over ROS image streams:

- face bounding boxes

- face landmarks

- body bounding boxes

- 2D skeleton joints.

This tool is part of the hri_rviz package.
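Overlaying perception features on an image stream essentially means mapping detection coordinates into pixel space. The Python sketch below assumes bounding boxes and landmarks arrive as coordinates normalized to [0, 1] relative to the image size; that convention and the helper names are assumptions for illustration, not a description of the plugin’s internals.

```python
from typing import List, Tuple


def _clamp01(v: float) -> float:
    """Keep a normalized coordinate inside the image."""
    return max(0.0, min(1.0, v))


def roi_to_pixels(roi: Tuple[float, float, float, float],
                  width: int, height: int) -> Tuple[int, int, int, int]:
    """Normalized (xmin, ymin, xmax, ymax) -> pixel (x, y, w, h), clamped."""
    xmin, ymin, xmax, ymax = (_clamp01(v) for v in roi)
    x0, y0 = round(xmin * width), round(ymin * height)
    x1, y1 = round(xmax * width), round(ymax * height)
    return x0, y0, x1 - x0, y1 - y0


def landmarks_to_pixels(points: List[Tuple[float, float]],
                        width: int, height: int) -> List[Tuple[int, int]]:
    """Normalized (x, y) landmarks -> integer pixel coordinates."""
    return [(round(_clamp01(x) * width), round(_clamp01(y) * height))
            for x, y in points]


print(roi_to_pixels((0.25, 0.5, 0.75, 1.0), 640, 480))  # (160, 240, 320, 240)
```

Working in normalized coordinates keeps detections independent of the camera resolution; the conversion to pixels only happens at drawing time.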

RViz’s Skeletons 3D

Inspired by the existing RobotModel plugin, this RViz plugin visualises the kinematic model of multiple detected bodies as 3D skeletons, according to their joint states, position and body orientation. This tool is also part of the hri_rviz package.
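Drawing a skeleton from joint states amounts to forward kinematics: each joint position follows from the previous one, the segment length and the accumulated joint angles, and the result is then placed in the world using the body’s position and orientation. The Python sketch below shows this for a planar shoulder-elbow-wrist chain; the function name, the 2D simplification and its parameters are hypothetical, whereas the plugin itself works from the full 3D kinematic model.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def arm_joint_positions(body_xy: Point, body_yaw: float,
                        lengths: Tuple[float, float],
                        q: Tuple[float, float]) -> List[Point]:
    """World-frame shoulder, elbow and wrist positions of a planar arm.

    body_xy, body_yaw: body position (m) and orientation (rad) in the world;
    the shoulder is assumed to sit at the body origin for simplicity.
    lengths: (upper arm, forearm) in metres; q: (shoulder, elbow) angles in rad.
    """
    c, s = math.cos(body_yaw), math.sin(body_yaw)

    def to_world(p: Point) -> Point:
        # Rotate by the body orientation, then translate by its position
        return (body_xy[0] + c * p[0] - s * p[1],
                body_xy[1] + s * p[0] + c * p[1])

    shoulder = (0.0, 0.0)
    elbow = (lengths[0] * math.cos(q[0]), lengths[0] * math.sin(q[0]))
    # The forearm direction accumulates both joint angles
    wrist = (elbow[0] + lengths[1] * math.cos(q[0] + q[1]),
             elbow[1] + lengths[1] * math.sin(q[0] + q[1]))
    return [to_world(p) for p in (shoulder, elbow, wrist)]


print(arm_joint_positions((1.0, 2.0), math.pi / 2, (0.3, 0.25), (0.0, 0.0)))
```

Separating the body pose from the joint angles mirrors the inputs listed above (joint states, position, orientation): the same joint states produce the same skeleton shape wherever the person stands.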

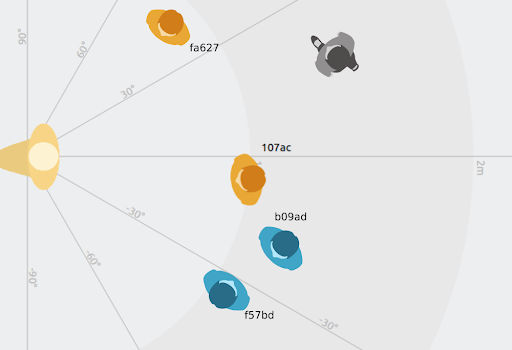

rqt_human_radar

An rqt plugin offering a radar-like view of the robot’s surroundings, displaying humans according to their position relative to the robot’s reference frame (e.g., the base link).
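Placing a person on a radar-like view involves expressing their position in the robot’s frame and converting it to range and bearing. The Python sketch below assumes positions already projected onto the ground plane, with x pointing forward and y to the left (the ROS REP-103 body-frame convention), and a sensor pose given by hand as (x, y, yaw) in the base frame; in a real ROS system that transform would come from tf. The function names are hypothetical.

```python
import math
from typing import Tuple

Pose2D = Tuple[float, float, float]  # (x, y, yaw) of a frame in base_link


def to_base_link(p_sensor: Tuple[float, float],
                 sensor_in_base: Pose2D) -> Tuple[float, float]:
    """Express a ground-plane point given in a sensor frame in base_link."""
    x, y, yaw = sensor_in_base
    c, s = math.cos(yaw), math.sin(yaw)
    return (x + c * p_sensor[0] - s * p_sensor[1],
            y + s * p_sensor[0] + c * p_sensor[1])


def radar_coords(p_base: Tuple[float, float]) -> Tuple[float, float]:
    """Range (m) and bearing (rad, positive to the robot's left) of a point."""
    return math.hypot(p_base[0], p_base[1]), math.atan2(p_base[1], p_base[0])


# A person 1.8 m ahead of a sensor mounted 0.2 m in front of the base
rng, bearing = radar_coords(to_base_link((1.8, 0.0), (0.2, 0.0, 0.0)))
print(f"range={rng:.2f} m, bearing={math.degrees(bearing):.1f} deg")
# range=2.00 m, bearing=0.0 deg
```

Converting everything to the base frame first means people detected by different sensors end up on the same radar, centred on the robot.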

To learn more about the software we have developed for our robot ARI, read our blog. To find out more about our social robots ARI, TIAGo and TIAGo Pro, visit our website, and get in touch with our team for more information or with any questions.