Next up in our SLAM series is intern Tessa Pannen, who is studying for an MA in Computational Engineering Science at the Technische Universität Berlin. As she’s coming to the end of her six-month internship, we quizzed Tessa about the successes and challenges of her new verification process for loop closure and framework for SLAM.

This may be an impossible task, but how would you describe SLAM in fewer than 50 words?

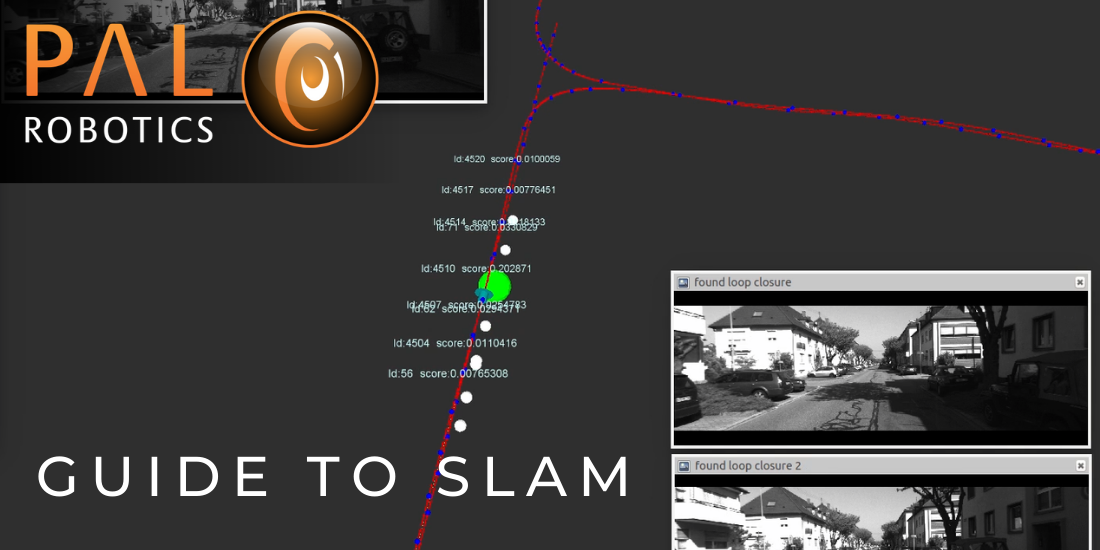

SLAM – simultaneous localisation and mapping – is a technique robots use to record their surroundings using sensors. They are then able to draw a map and estimate their location within that map. Loop closure is needed to validate the robot’s estimated position in the map and correct it if necessary.

Can you tell us a bit more about the loop closure process?

Loop closure is the ability of the system to recognise a place the robot has previously visited. In visual SLAM, we use RGB cameras to collect information and evaluate whether a robot has “seen” a place before.

A camera integrated in the robot continuously takes pictures of its surroundings and stores them in a database, similar to a memory. Because a robot can’t “see” the way humans do, it has to break the images down into prominent features such as corners or lines in order to compare them. As their properties can be stored as binary values, these features can be easily compared by an algorithm.
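
As a rough illustration of why binary features are easy to compare: a common measure is the Hamming distance, which counts the bits that differ between two descriptors. The sketch below is an assumption about how such a comparison might look, not the project's actual code, and the two 4-byte descriptors are made up for the example.

```python
def hamming_distance(a: bytes, b: bytes) -> int:
    """Count the bits that differ between two equal-length binary descriptors."""
    if len(a) != len(b):
        raise ValueError("descriptors must have the same length")
    # XOR leaves a 1 wherever the two bytes disagree; count those 1s.
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


# Two hypothetical 4-byte descriptors of the same corner seen twice.
corner_now = bytes([0b10110010, 0b01101100, 0b11110000, 0b00001111])
corner_old = bytes([0b10110011, 0b01101100, 0b11010000, 0b00001111])

print(hamming_distance(corner_now, corner_old))  # small value = similar features
```

A small distance suggests the two features describe the same point in the scene; a large one suggests they do not.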

We then extract a limited number of promising candidate images from the database for the loop closure. These candidates are very likely to show the same location as the current image because they contain a high number of features with the same or similar properties. We can check which is the most likely to show the same location as the one the robot currently “sees” by comparing several geometrical relations between the features of a candidate image and the current image.
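
The candidate selection step could be sketched roughly as follows. This is a simplified illustration under stated assumptions: descriptors are plain 8-bit integers, the database is a dictionary, and the names `count_matches`, `top_candidates`, `k` and `max_distance` are hypothetical. The geometric check that follows the selection is not shown.

```python
def hamming(a: int, b: int) -> int:
    """Number of differing bits between two integer-encoded descriptors."""
    return bin(a ^ b).count("1")


def count_matches(query, candidate, max_distance=1):
    """Count query features whose closest candidate feature is similar enough."""
    matches = 0
    for q in query:
        best = min((hamming(q, c) for c in candidate), default=None)
        if best is not None and best <= max_distance:
            matches += 1
    return matches


def top_candidates(query, database, k=3, max_distance=1):
    """Return up to k image ids, ordered by how many features they share."""
    scored = [
        (count_matches(query, descriptors, max_distance), image_id)
        for image_id, descriptors in database.items()
    ]
    # Highest match count first; ties broken by image id for determinism.
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [image_id for score, image_id in scored[:k] if score > 0]


# Hypothetical data: the current image and three stored images.
current = [0b11110000, 0b10101010, 0b00001111]
stored = {
    "img_a": [0b11110000, 0b10101011, 0b00001111],
    "img_b": [0b11110001, 0b01010101],
    "img_c": [0b00110011],
}
print(top_candidates(current, stored))
```

A real system would typically use an index rather than comparing against every stored image, but the idea of ranking by shared features is the same.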

Take a look at our video if you’re wondering what loop closure looks like.

How does your work differ from existing research?

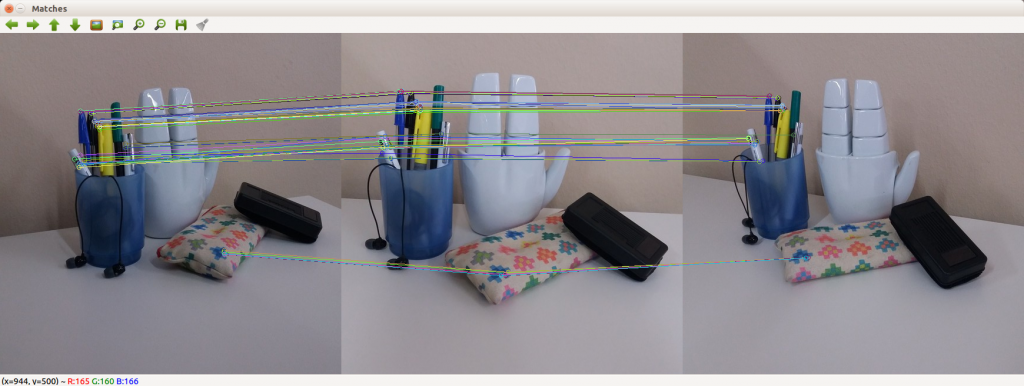

In the first part of my internship, I tried a different approach to the geometrical check of the candidates by comparing the features of three images instead of two. This basically means adding another dimension to the geometrical relation between the images, so a 2D matrix becomes three-dimensional. Part of my research involved finding a stable way to compute this transformation using only the matched feature points in the images.
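
For readers who want the maths: the interview does not name the relations involved, so the following is an assumption. In standard multi-view geometry, the two-image relation is the $3 \times 3$ fundamental matrix $F$, and its three-image counterpart is the $3 \times 3 \times 3$ trifocal tensor with slices $T_1, T_2, T_3$. For a point seen at $\mathbf{x}$, $\mathbf{x}'$ and $\mathbf{x}''$ (homogeneous coordinates) in the first, second and third image:

$$
\mathbf{x}'^{\top} F \, \mathbf{x} = 0
\qquad \text{(two images)}
$$

$$
[\mathbf{x}']_{\times} \Big( \sum_{i=1}^{3} x^{i} \, T_i \Big) [\mathbf{x}'']_{\times} = 0_{3 \times 3}
\qquad \text{(three images)}
$$

Here $x^{i}$ is the $i$-th coordinate of $\mathbf{x}$ and $[\cdot]_{\times}$ is the skew-symmetric cross-product matrix. Both constraints depend only on matched points, which fits the description above.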

In the second part of the internship, I joined my supervisor in working on a SLAM framework that we hope will manage all the tasks of SLAM by the time it’s finished, namely mapping, localisation and loop closure. I’d never contributed to such a complex system before, so it was incredibly exciting.

For this task, I implemented several new ways of sourcing a list of loop closure candidates, based on the likelihood of the current and all previous positions instead of feature comparison – a method known as Nearest Neighbor Filter.
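
A position-based filter of this kind might look roughly like the sketch below. It is a deliberately simplified assumption, not the implementation described here: it uses plain Euclidean distance between estimated 2D positions in place of a likelihood, and the names `nearby_candidates`, `radius` and `skip_recent` are hypothetical.

```python
import math


def nearby_candidates(current, history, radius=1.5, skip_recent=1):
    """Return indices of earlier positions within `radius` of the current one.

    The most recent `skip_recent` positions are ignored, since the robot is
    trivially close to where it was a moment ago.
    """
    older = history[:-skip_recent] if skip_recent else history
    hits = []
    for index, position in enumerate(older):
        distance = math.dist(current, position)
        if distance <= radius:
            hits.append((distance, index))
    hits.sort()  # closest first
    return [index for _, index in hits]


# Hypothetical trajectory: the robot drives a loop and returns near the start.
trajectory = [(0, 0), (1, 0), (5, 0), (5, 5), (0, 5), (0.5, 0.5)]
estimate = (0.2, 0.1)
print(nearby_candidates(estimate, trajectory))
```

Only the images recorded at the returned positions would then need to go through the more expensive checks.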

What are the next steps in your research?

Tests, tests, and more tests! The only way we’ll know how the new approaches influence the whole loop closure process is by running rigorous tests.

I know you can’t give away too much, but are the results in line with what you expected?

The computation of the transformation works well for simple test images like the one above. We don’t yet know if it’s robust enough to support the loop closure, since we still need to run the tests. It might improve the loop closure’s performance, it might not. But doing research is about trying new things, and there’s no guarantee of success. Keep an eye on the blog – hopefully we will be able to publish another exciting new video!

What have been the highlights so far?

I love the satisfaction you get each time you reach another milestone in your project and see your code slowly developing and actually working. I hope we can make the loop closure run on a real robot by the end of my internship – that’s the big highlight I’m looking forward to.

Visualization of the loop closure process is important in order to find bugs in the code. But when it finally works, it’s hugely exciting to see a simulated robot driving in a loop, highlighting the areas it recognises, and I think: “wow, it’s actually working! I did this!”

What do you think has been the biggest challenge?

Because I wasn’t familiar with any concepts of computer vision, I had to brush up on my maths skills, especially geometry! There was a lot of dry theory I had to absorb in the first few weeks. I also needed to familiarise myself with the existing code of the SLAM project and understand what’s happening in the bigger picture. It can be challenging to dive into a big project without losing the overview.

How do you think what you’ve learned so far will help in the final year of your degree?

I’ve definitely learned to organise myself better. I now plan what I want to do carefully before I write a single line of code. I also take more notes, which makes it easier to reconstruct my own thoughts and helps me stay on track and not get lost in side tasks. Most importantly, I’ve learned to realise when I need help and ask for it. Sometimes when you’re stuck, you have to swallow your pride and ask for help if you don’t want to lose more time on a problem.

Here’s a video of the loop closure process: