Embodiment of AI in a robot

The use of robots in non-industrial environments, such as healthcare, logistics, business and social gatherings, is set to keep increasing over the coming years. Robotics has the potential to help address some of the challenges we currently face as a society, through developments such as healthcare assistance for an ageing population, support at home, and improved processes and competitiveness in business. According to Fortune Business Insights, the global service robotics market is set to reach USD 41.49 billion by 2027, and AI is expected to play an increasingly important role in service robotics.

Robots in industrial environments usually perform set tasks, and the environment tends to be structured, with little change. Non-industrial environments can be complex, dynamic and unpredictable, so robots that operate in them need more skills. This makes enhanced Artificial Intelligence (AI) and its many subcategories, including Deep Learning, essential for the successful deployment and growth of service robotics. AI involves the simulation of human intelligence processes by computers or robots in order to assist with tasks that are usually done by humans. It has many applications, in areas such as security and surveillance, manufacturing, retail, agriculture, and customer support.

Embodiment refers to housing AI within a physical body. Studies have shown that embodying AI within a robot increases its acceptability amongst users, for example by positively changing social attitudes such as empathy. With humanoid robots in particular, embodiment, including the aesthetics of the robot’s physical design, can affect how a person interacts with the robot. Several studies have found an embodied design to be a necessary element for a positive perception of a robot.

If you want to read more on the subject, don’t forget to check our blog on robotics research.

Machine Learning and Deep Learning in robotics

Machine Learning is a subcategory of AI in which algorithms learn from data without being explicitly programmed to do so.

Deep Learning, a subset of Machine Learning based on multi-layer neural networks, enables robots to gather facts about a situation through sensors or human input, compare this information with stored data, and then decide what the information means.

For AI in service robotics, Machine Learning and Deep Learning bring many capabilities that help robots interact with humans more easily and understand their environment better. These abilities include:

- facial recognition

- emotion recognition

- people tracking

- enhanced fall detection

- speech recognition

- environment understanding

- object recognition

- object tracking

- control of object manipulation under uncertainty

Technology corporation NVIDIA develops GPU-based Deep Learning in order to use AI to improve everyday life in areas such as disease detection, weather prediction, and self-driving vehicles. NVIDIA designs graphics processing units (GPUs) for the gaming and professional markets, as well as system-on-chip units (SoCs) for the mobile computing and automotive markets.

NVIDIA Jetson is one of a number of platforms that enable AI in service robotics. At PAL Robotics, we work with the NVIDIA® Jetson™ TX2, which provides speed and power efficiency in a compact embedded AI computing device, opening the door to a whole new world of possibilities born from the synergy between AI and robotics.

Applications with PAL Robotics’ robots

At PAL Robotics, as well as equipping our robots with NVIDIA Jetson GPUs, we are continuously developing AI applications for our robots, some of them as part of EU research projects that enable new use cases in robotics. Here are some examples:

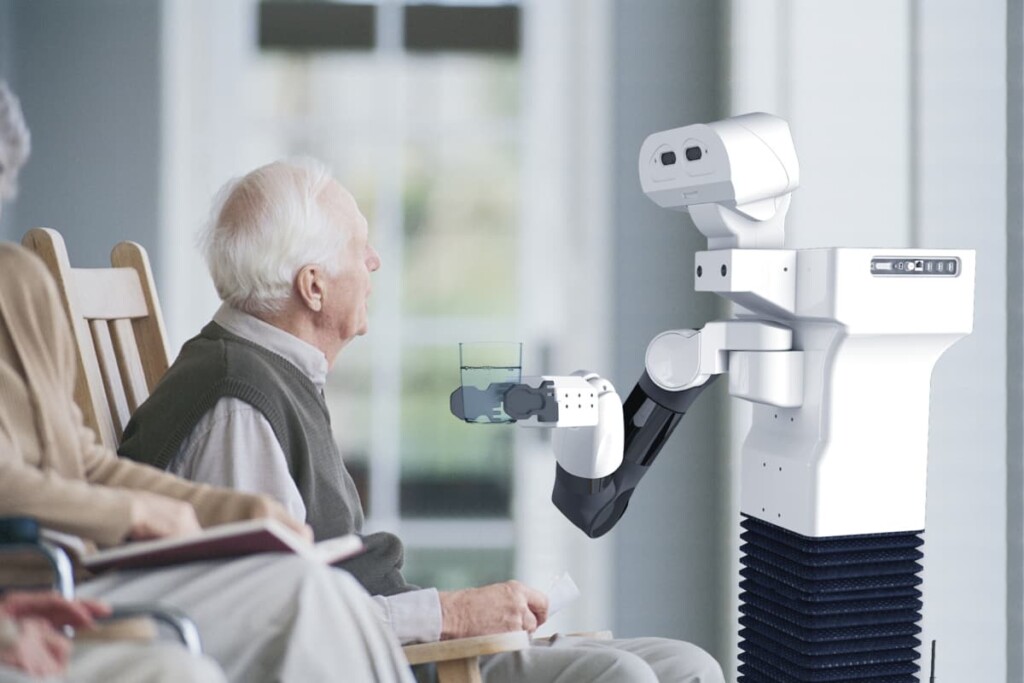

Object detection for mobile manipulator robot TIAGo

Combining the TIAGo mobile manipulator robot with the NVIDIA Jetson GPU gives the robot enough computing power to integrate new kinds of Artificial Intelligence applications and Machine Learning developments. It is also convenient for embodying AI algorithms, as they can run on board without depending on any network connection. Furthermore, with the NVIDIA Jetson TX2 we provide a TensorFlow Object Detection system.
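To give a feel for what a robot does with the output of an object detector, here is a minimal Python sketch. It is not the code running on TIAGo. It assumes the detector returns parallel lists of normalized boxes (`[ymin, xmin, ymax, xmax]`), confidence scores and class ids, which is the layout used by the TensorFlow Object Detection API outputs `detection_boxes`, `detection_scores` and `detection_classes`. The `Detection` class, the `filter_detections` helper, the label map and the 0.5 threshold are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass
class Detection:
    """One detected object, with its box converted to pixel coordinates."""
    label: str
    score: float
    box_px: Tuple[int, int, int, int]  # (left, top, right, bottom)


def filter_detections(
    boxes: Sequence[Sequence[float]],
    scores: Sequence[float],
    classes: Sequence[int],
    labels: Dict[int, str],
    width: int,
    height: int,
    min_score: float = 0.5,  # hypothetical threshold, tune per application
) -> List[Detection]:
    """Keep confident detections and sort them from most to least confident."""
    results = []
    for box, score, cls in zip(boxes, scores, classes):
        if score < min_score:
            continue  # discard low-confidence detections
        ymin, xmin, ymax, xmax = box  # normalized to the range [0, 1]
        results.append(
            Detection(
                label=labels.get(int(cls), "unknown"),
                score=float(score),
                box_px=(
                    round(xmin * width),
                    round(ymin * height),
                    round(xmax * width),
                    round(ymax * height),
                ),
            )
        )
    return sorted(results, key=lambda d: d.score, reverse=True)
```

A manipulation or interaction behaviour would then typically work only with the filtered list, for example by picking the highest-scoring detection of a requested label.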

We have worked to provide the TIAGo robot with the perceptual competencies that are necessary to localize itself in the environment, detect objects, other robots and humans and interact with them. Each robot performs these tasks autonomously and uses its own sensors.

An example of object detection with TIAGo is within the EU Collaborative project Open DR. The main objective of this healthcare project is to build a versatile robotic assistant using the TIAGo mobile manipulator robot that can help with the wide range of tasks a patient needs to perform in their daily life. In the Open DR project, TIAGo serves as an end-user assistant by receiving visitors and fetching and delivering objects to them in a medical setting. To do all this, TIAGo needs to incorporate recognition of human presence, activities and vocal instructions, object detection, and detection of emotional states to enable person-centric human interaction.

Fisheye camera integration on social robot ARI

Social humanoid robot ARI is an AI-powered robot that integrates the NVIDIA Jetson TX2 GPU as well as an Intel Core i7 processor. ARI enables easy integration of AI algorithms thanks to the robot’s unrivalled processing power.

As an example, as part of EU Collaborative project SPRING we have integrated RGB fisheye cameras into robot ARI to enhance the robot’s field of vision. Project SPRING is working on the adaptation of Socially Assistive Robots, including ARI, for a hospital environment. The main goal is for the robot to perform multi-user interaction in different use cases in hospitals, such as welcoming newcomers to the waiting room, helping with check-in/out forms, providing information about the consultation agenda, and acting as a guide to appointments.

For project SPRING, the updates to ARI include tests of the integration of the NVIDIA Jetson and fisheye cameras. Wide-angle, omnidirectional and fisheye cameras are now popular in robotics tasks including navigation, localization, tracking, and mapping. In the SPRING project, the RGB fisheye cameras will be used to detect and recognize people and objects, for improved interaction in the hospital use cases.
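To illustrate why a fisheye lens can cover such a wide field of view, here is a small Python sketch of the ideal equidistant fisheye model, in which the distance of a pixel from the image centre grows linearly with the angle from the optical axis (r = f · θ). This model is an assumption made for illustration: the lens model and calibration of ARI’s cameras are not stated here, and real lenses also need distortion coefficients. The function name and the example focal length and image centre are hypothetical.

```python
import math
from typing import Tuple


def project_equidistant(
    point: Tuple[float, float, float],
    focal_px: float,
    cx: float,
    cy: float,
) -> Tuple[float, float]:
    """Project a 3D point in the camera frame (z along the optical axis)
    to pixel coordinates with the ideal equidistant model r = f * theta."""
    x, y, z = point
    r_xy = math.hypot(x, y)        # distance of the point from the optical axis
    if r_xy == 0.0:
        return (cx, cy)            # a point on the axis maps to the image centre
    theta = math.atan2(r_xy, z)    # angle between the ray and the optical axis
    r = focal_px * theta           # radial distance in the image, in pixels
    return (cx + r * x / r_xy, cy + r * y / r_xy)


# A point 90 degrees off-axis still lands at a finite pixel position,
# whereas a pinhole model (r = f * tan(theta)) would place it at infinity.
u, v = project_equidistant((1.0, 0.0, 0.0), focal_px=200.0, cx=320.0, cy=240.0)
```

This is what lets a single 180º camera keep people standing to the side of the robot inside the image.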

The ARI robots used in this project include two 180º RGB fisheye cameras, a ReSpeaker Mic Array v2.0, and an NVIDIA Jetson TX2. Following the integration for this project, RGB fisheye cameras are now available as an optional add-on for social robot ARI.

Adding a thermal camera to robot ARI

As part of the EU collaborative project SHAPES (“Smart and Healthy Ageing through People Engaging in supportive Systems”), digital solutions from SHAPES project partners are being integrated into ARI to enhance its capabilities as a home robot, such as fall detection and video calls. Modifications to ARI include the addition of a thermal camera. At PAL Robotics we have been working to develop the thermal camera format and have integrated the camera into ARI’s head.

In the project pilot, the thermal camera will allow the robot to monitor the temperature of the user at home at given times of the day, as well as measure the temperature of any visitors to help detect COVID-19. Doing this successfully requires facial recognition. In another of the upcoming pilots, where ARI will play cognitive games as part of a group, the plan is to use the camera to help the caregiver monitor the residents’ temperatures.

Many of our research customers have also added their own Machine Learning and Deep Learning integrations working with our robots. The value of AI in service robotics goes beyond giving a robot stronger and more sophisticated interaction abilities. AI-powered robots are actually able to embody their AI-based decisions and act in the physical world, opening the door to a wider range of robotics applications.

To read more about PAL Robotics’ service robots and their capabilities, visit our website. To ask about the robotic solution that may be right for you, don’t hesitate to get in touch with us.