Collaborative robotics and human-robot interaction are becoming ever more important to the adoption of robots. There is a demand for intuitive solutions that users can easily program and deploy in their everyday lives, in particular solutions that are suitable for non-robotics experts. At PAL Robotics we are focused on constantly developing our platforms to better fit the needs of users. Based on user needs and feedback from the social robotics use cases we participate in, we have been working on a number of developments to ARI, the state-of-the-art social robot. Here we take the opportunity to tell you more!

Collaborative robots have numerous applications across many industries. Social robots, in particular, are used in areas including education, healthcare, assisted living and events. Within these settings, social robots are deployed as assistants, receptionists, entertainers, and brand ambassadors.

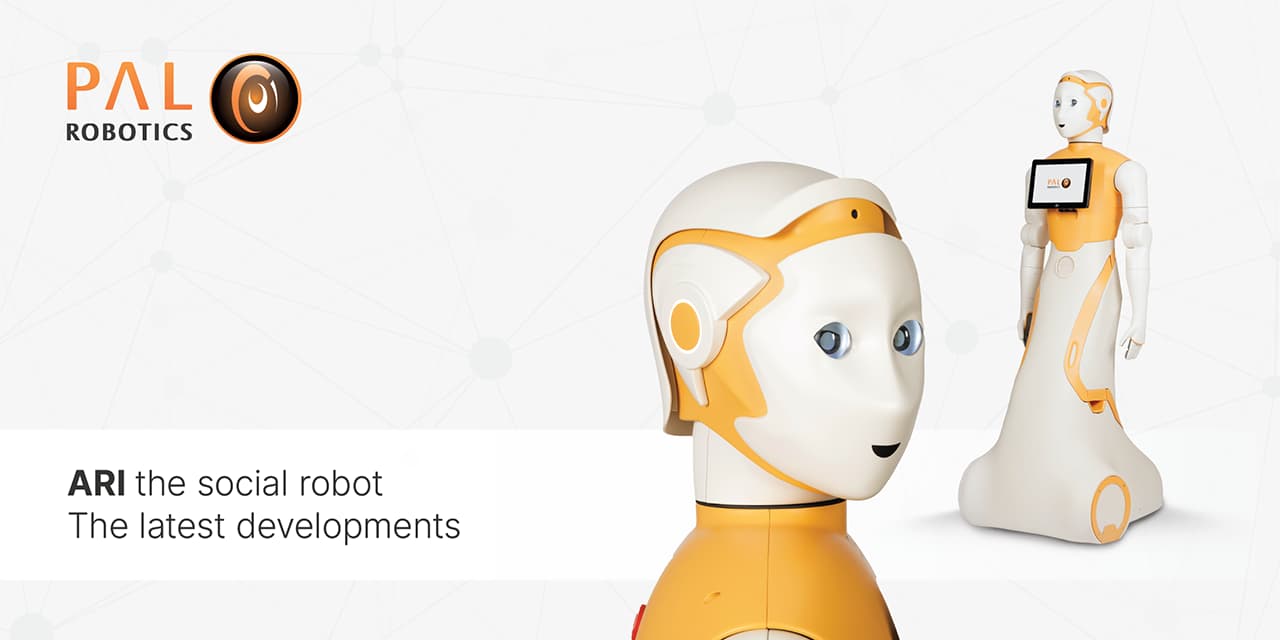

ARI is our latest humanoid-like platform for research that is specifically designed to be an interactive and engaging social robot. The main application of the robot is to welcome, meet, support, or even entertain its audience while serving as a dynamic point of information.

Safe and autonomous, ARI’s design, expressiveness, and robust hardware also make it an ideal platform for research in social robotics and Human-Robot Interaction. ARI is also fully ROS-based, which makes it even easier to test and deploy your own developments on the platform. You can do that even if you do not have the robot available: you can find everything you need on ARI simulation in the ROS wiki article, and you can also check the excellent learning material created by our partners, The Construct.

ARI’s key features for social interaction: from customisable chatbot to expressive eyes

Here are some of the ARI features you can rely on to boost human-robot interactions:

- Expressive interactions – including animated eyes with a library of expressions

- Agile motions – along with a graphical interface to create motions

- Semantic reasoning – with an integrated RDF knowledge base

- Speech & understanding – on-board speech recognition and synthesis and a customisable chatbot

- Social perception – detect and recognise users, track 2D and 3D skeletons in real time

- Navigation in human environments – with an easy-to-use interface to create points of interest
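
For readers new to RDF, the idea behind a knowledge base of this kind can be sketched in a few lines of Python. This is only an illustration of subject-predicate-object triples and pattern queries, not ARI’s actual API: the `TripleStore` class and every identifier in it (`person_1`, `isEngagedWith`, and so on) are hypothetical names chosen for this example.

```python
from typing import List, Optional, Set, Tuple

# An RDF statement is a (subject, predicate, object) triple.
Triple = Tuple[str, str, str]


class TripleStore:
    """Toy in-memory triple store (hypothetical; not ARI's knowledge base API)."""

    def __init__(self) -> None:
        self._triples: Set[Triple] = set()

    def add(self, subject: str, predicate: str, obj: str) -> None:
        """Store one statement; duplicates are ignored because we use a set."""
        self._triples.add((subject, predicate, obj))

    def query(
        self,
        subject: Optional[str] = None,
        predicate: Optional[str] = None,
        obj: Optional[str] = None,
    ) -> List[Triple]:
        """Return all triples matching the pattern; None acts as a wildcard."""
        return sorted(
            t
            for t in self._triples
            if (subject is None or t[0] == subject)
            and (predicate is None or t[1] == predicate)
            and (obj is None or t[2] == obj)
        )


# Hypothetical facts a social robot might record about its surroundings.
kb = TripleStore()
kb.add("person_1", "rdf:type", "Human")
kb.add("person_2", "rdf:type", "Human")
kb.add("person_1", "isEngagedWith", "robot")

# Who are the known humans? Which of them is engaged with the robot?
humans = [s for s, _, _ in kb.query(predicate="rdf:type", obj="Human")]
engaged = [s for s, _, _ in kb.query(predicate="isEngagedWith", obj="robot")]
print(humans, engaged)
```

A real RDF knowledge base adds namespaces, ontologies and inference on top of this, but the underlying pattern of storing and matching triples is the same.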

ARI’s recent hardware developments: redesign of the touchscreen integration and new degrees of freedom within the arms

ARI’s hardware was designed to make the robot engaging and expressive. PAL Robotics closely follows market trends and develops its products in collaboration with users. Recent developments on ARI have focused on:

- The arms

- The touchscreen

Recently, the arms and the neck of the robot have been redesigned, removing pinching points without compromising the movements of the arms. In addition, we have added one Degree of Freedom (DoF) to each arm: ARI now has wrists, and each arm has a total of 4 DoF. This enables ARI to be more expressive and engaging when interacting with users.

Another of our recent hardware updates concerns the touchscreen. It has been modified and is now mounted outside the covers on the torso for improved ergonomics, while also offering the possibility of installing additional sensors at torso height (such as a thermal camera or an additional RGB or RGB-D camera).

ARI the social robot’s GPU upgrades and LIDAR sensors

ARI is an advanced platform for in-depth studies in areas such as human-machine interaction, cognitive computing, autonomous navigation, and programming via ROS. Following recent developments, it is now possible to equip our research robot ARI with LIDAR sensors, giving the platform autonomous navigation capabilities that open up a number of potential new use cases.

We have also expanded the upgrade options for CPUs and GPUs. ARI can now be configured with up to an Intel i9 CPU, 32 GB of RAM and a 1 TB SSD.

There are also several powerful GPU upgrade possibilities available with the latest embedded GPUs from NVIDIA (NVIDIA Xavier or the recently released NVIDIA Orin).

ARI’s human-robot interaction capabilities for social perception and social behaviours

As a platform, the social robot ARI offers multiple capabilities for social perception and for fostering social interactions. Some of these have always been part of the platform, while others are more recent developments. Here is an overview of some of these capabilities:

- Face detection

- Face recognition

- 3D head pose and gaze tracking

- 2D body tracking

- 3D body tracking

- ROS-compatible 3D human model

- Active user tracking

- Multi-modal fusion

- Engagement detection

- Speech recognition (ASR)

- Dialogue management

- Multi-modal speech

- Saliency-driven attention

- Expressive gestures

- Semantic reasoner

ARI the social robot’s use cases in education, hospitals and care homes

The social robot ARI takes part in a number of use cases in different environments, all with the aim of helping users in their daily lives. These use cases also allow us to keep developing the solution to meet users’ needs. Here are some recent examples:

Project PRO-CARED: interactions with children in education

Project PRO-CARED involves designing mechanisms for personal robots to proactively interact with children, providing adaptive and personalised assistance in an educational context. This includes pilots in schools with a small number of children, who interact with the robot over several sessions while the robot acts as a tutor for teaching and supporting skills.

Project TALBOT: supporting users with daily tasks in hospitals

In the TALBOT project, we are developing a cognitive architecture for the humanoid robot ARI and validating the proposed technology in healthcare application scenarios, in particular in a day hospital for users of diverse ages. ARI will be embedded in the hospital and able to model and engage people in a range of daily tasks. Example tasks include giving patient reminders and information, enabling video calls to friends and family, and general daily assistance.

Project NHoA: assisting and monitoring patients in care homes and homes

The general objective of project NHoA is the co-design of a socially intelligent assistive robot that is robust, adaptable, and encourages patient acceptance and engagement. The robot will aid in everyday life with tasks including monitoring the environment, safety, general assistance, entertainment, remote health monitoring, and encouraging users to do physical exercise and cognitive training.

To learn more about PAL Robotics’ social humanoid robot ARI and the robot’s capabilities in HRI and AI, read some of our previous blog posts.

In our webinar ‘Boosting interactions with ARI’, we will introduce you to the social humanoid robot’s features and demonstrate the robot’s capabilities through live demos. The webinar will also include a Q&A session. Book your place today.

Remember to check our social media channels regularly to hear more about our latest news and research on ARI. To ask how the platform may be a fit for your organisation or your research, don’t hesitate to get in touch with us.