TIAGo Robot and Deep Learning

Combining robotics with Deep Learning can make robots more intelligent than ever before. A flurry of research is helping robots understand their surroundings and make decisions on their own. Sai Kishor Kothakota, a UPC student pursuing a Master's in Automatic Control and Robotics, is doing an internship at PAL Robotics. There he is applying Deep Learning on one of our TIAGo robots, which we have fitted with an NVIDIA Jetson, one of the robot's optional features. Here's our conversation with him:

In your own words, what is Deep Learning?

Deep Learning is a subfield of machine learning that deals with techniques for teaching computers to do tasks that humans do naturally. These methods are built on artificial neural networks, which are loosely inspired by the structure and function of the brain. Deep Learning is already being used in many industries, such as automated driving, aerospace, medical research, industrial automation and electronics, and on many traditional machine learning problems it outperforms the previous state-of-the-art approaches.

Why is TIAGo suitable for doing research with Deep Learning?

As a mobile manipulator, TIAGo is a versatile platform for this kind of research. Combining TIAGo's capabilities with those of Deep Learning makes it easy to command the robot to perform a desired task. TIAGo has been part of social robotics for many years, and this multifaceted application will change the way humans and the robot interact, helping TIAGo generalize better across different tasks.

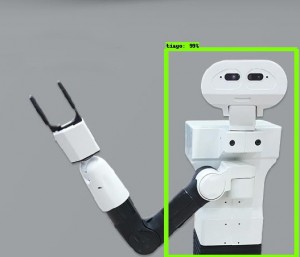

TIAGo has even learned to recognize itself!

What is your primary focus in your project with TIAGo?

I am mainly focusing on object and speech recognition. With speech recognition, users can give TIAGo commands in their own natural spoken words. Converting the audio into text or another machine-readable format helps the robot better interpret what the person is saying. Later on, TIAGo could use this to hold conversations, tell jokes, search for content or play a song that suits the situation.

With object recognition, TIAGo can detect the items around it. The robot can tell similar-looking objects apart and understand which one the user wants. Building object recognition models is somewhat similar to how humans learn, starting from early childhood.

Could you tell us more about this?

For instance, a toddler learns about cats by pointing at different objects and saying the word "cat". The parent answers: "Yes, that is a cat," or: "No, that is not a cat." As the toddler keeps pointing at objects, the child becomes more aware of the features that all cats share. What happens is that the brain builds increasingly abstract representations, organized as a hierarchy, until it can recognize the object reliably. Something similar happens when robots use Deep Learning: each layer of the network builds on the features learned by the layer before it.
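To make the "yes / no" feedback in this analogy a bit more concrete, here is a toy sketch in Python. It is not the code running on TIAGo, and it is not a deep network: it is a single perceptron, and the four hand-picked features (whiskers, pointy ears, barking, wheels) and the training examples are hypothetical. It only shows how labeled feedback corrects a model's guesses; a real deep network would instead learn its own features, layer by layer, from raw camera images.

```python
# Toy illustration of learning from "yes, that is a cat" / "no, that is not a cat"
# feedback with a single perceptron. This is NOT a deep network and NOT the
# code running on TIAGo; the features and examples below are hypothetical.
from typing import List, Tuple

# Hypothetical hand-picked features: [has_whiskers, has_pointy_ears, barks, has_wheels]
Example = Tuple[List[float], int]  # (features, label): 1 = "cat", 0 = "not a cat"

TRAINING_DATA: List[Example] = [
    ([1.0, 1.0, 0.0, 0.0], 1),  # a cat
    ([1.0, 0.0, 1.0, 0.0], 0),  # a dog
    ([0.0, 0.0, 0.0, 1.0], 0),  # a toy car
    ([0.0, 1.0, 1.0, 0.0], 0),  # another dog
]


def predict(weights: List[float], bias: float, features: List[float]) -> int:
    """Return 1 ("cat") if the weighted score is positive, else 0 ("not a cat")."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0


def train(data: List[Example], epochs: int = 20, lr: float = 0.1) -> Tuple[List[float], float]:
    """Perceptron learning rule: adjust the weights only when the guess was wrong."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for features, label in data:
            # error is +1 or -1 when the "parent" corrects the guess, 0 when it was right
            error = label - predict(weights, bias, features)
            if error != 0:
                mistakes += 1
                weights = [w + lr * error * x for w, x in zip(weights, features)]
                bias += lr * error
        if mistakes == 0:  # every example answered correctly: stop early
            break
    return weights, bias


if __name__ == "__main__":
    w, b = train(TRAINING_DATA)
    for features, label in TRAINING_DATA:
        print(features, "expected", label, "predicted", predict(w, b, features))
```

The training loop stops as soon as a full pass over the examples needs no corrections, a little like the toddler who no longer needs the parent's feedback.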

In summary, do you believe robots and Deep Learning have a bright future together?

Yes, indeed. With these kinds of abilities, robots will be able to perform tasks more flexibly. In simple words, Deep Learning lets robots respond more intelligently to different scenarios. This is surely a good start for the future of intelligent species!

Thank you for sharing your research with us, Sai! If you would like to learn more about integrating an NVIDIA Jetson into the TIAGo robot, visit the contact section of our website, or take a look at our blog to learn more about our robots and research.