SLAM – simultaneous localisation and mapping – is a technique robots use to build a map of their environment, ascertain where they are within the map and assess whether they’ve been to a particular location before. It’s an incredibly important technique within navigation research, so important in fact that we currently have a team focusing on the topic.

We’ve interviewed two PAL Robotics interns, who are spending their six month placements helping to develop and test new approaches to SLAM. First up is Robotics Masters student from University of Genoa (Italy), Elena Rampone, who has kindly volunteered to answer our questions and explain how her research into loop closure and place recognition may help a robot recognise its environment.

This may be an impossible task, but how would you describe SLAM in less than 50 words?

A robot navigating in an environment needs to keep track of its movements by estimating its current position, building an internal map of the environment and recognising if it has already visited a particular place. This is SLAM. The estimations are performed by analysing data acquired by the robot’s sensors.

What exactly are you working on?

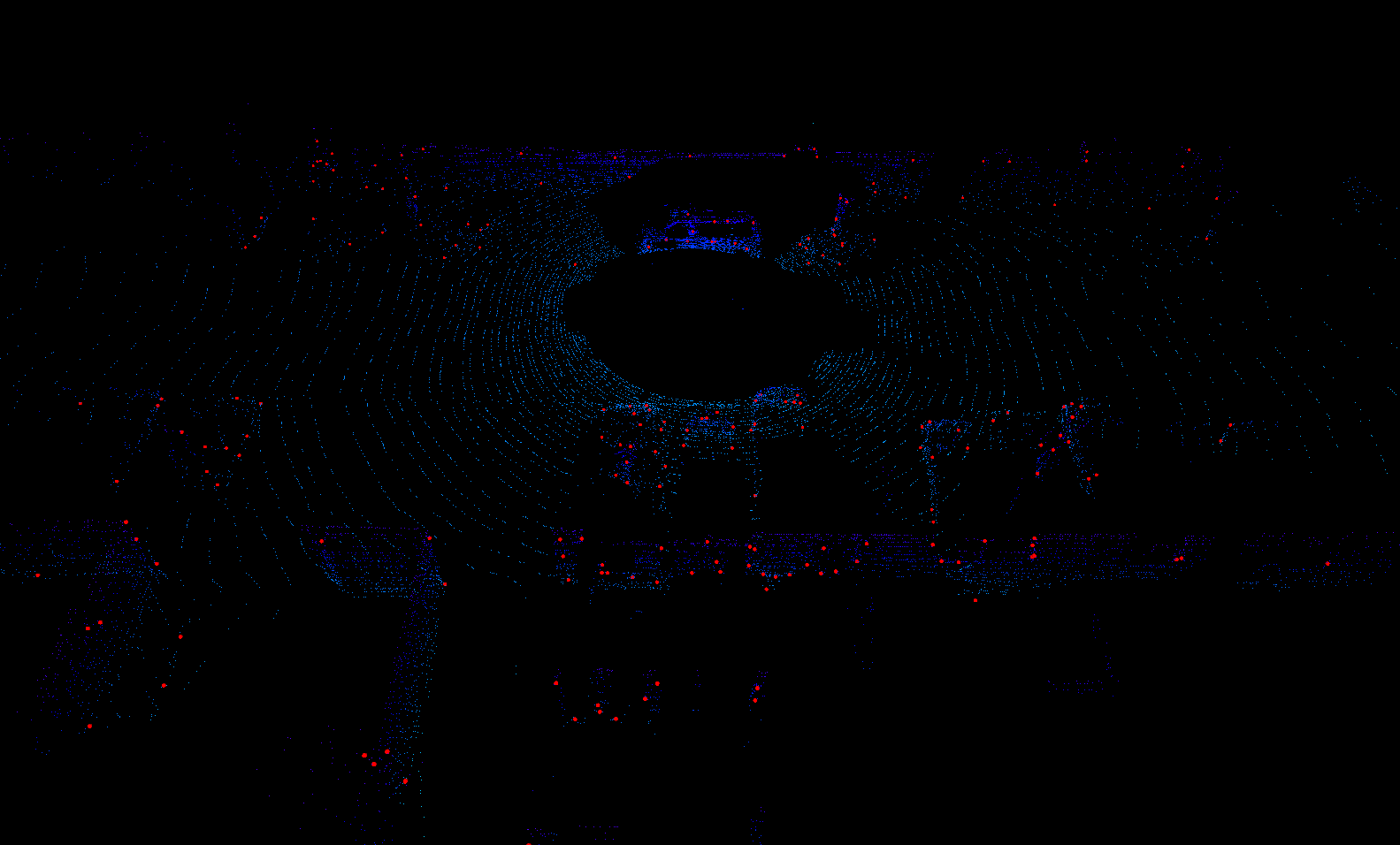

My project can be broken down into two steps. The first consists of adapting the company’s place-recognition framework, based on the Bag of Words algorithm, to 3D point clouds. Point clouds provide a 3D depiction of the world and are characterised by a compact descriptor, which allows us to efficiently compare them.

The whole system is ROS-based and tested using the Kitti dataset, a widely-used outdoor dataset of point clouds acquired by a Velodyne sensor.

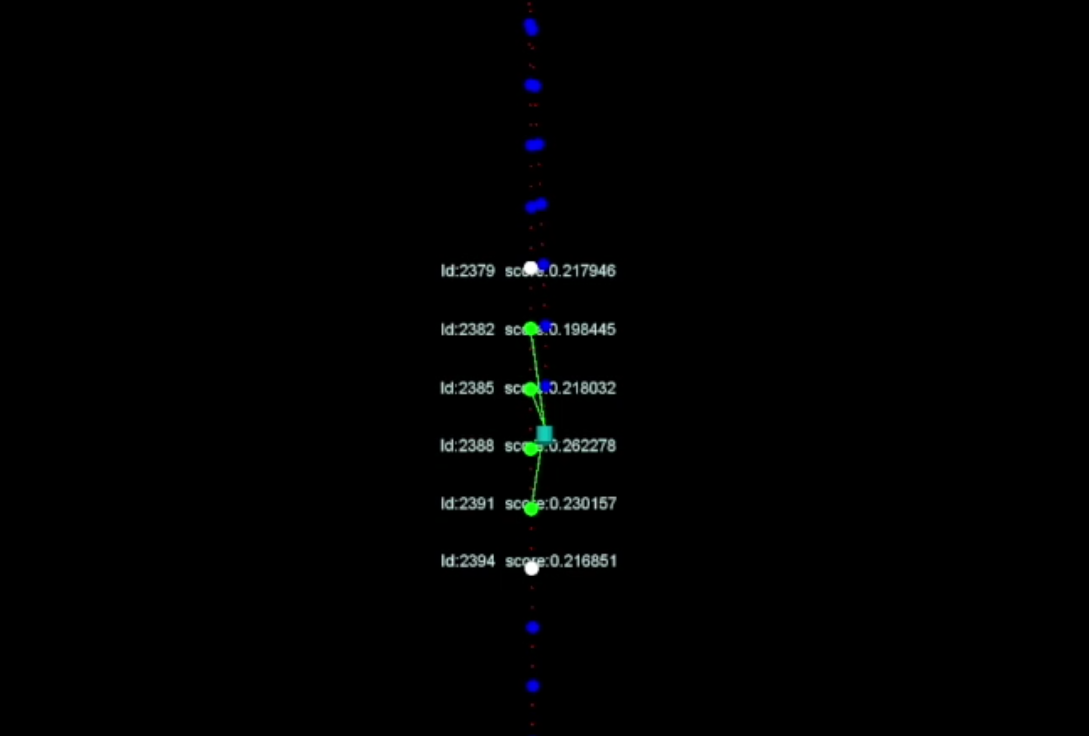

When the robot moves, the descriptors characterising new observations of the environment are inserted into a database that stores information about all the places the robot is visiting. The system continuously checks if a new observed cloud is similar to an antecedent one by comparing its descriptor with those present in the database and then selecting the corresponding clouds. [Image: loop closure]

After the system selects the candidate clouds, it is necessary to assert whether the robot has returned to a previously visited location and, if so, estimate the relative transformation between the two clouds in order to update the position of the robot (loop closure). The second step involves using the Iterative Closest Point (ICP) algorithm – a method that iteratively matches points between two clouds in order to find the relative transformation that minimizes an error measure. Any candidate clouds that correctly converge with ICP are selected as correct loop closures. The estimated pose will then be used to update the robot’s position. What are the next steps in your research? I’ve already been interning at PAL Robotics for five months, so I’m coming to the end of my project. For the final few weeks I plan to keep testing system performance by using different methods to extract point cloud features, in order to identify the one that works best. I’m testing both the place-recognition and loop closure elements to ensure the end result is robust and – most importantly – useful for the PAL Robotics team. I know you can’t give too much away, but are the results in line with what you expected? With the help of open source libraries, I could come to a suitable trade-off between recognition accuracy and execution speed by comparing many different configurations in a semi-automated fashion. The results will allow me to deploy the solution on a TIAGo robot for real-world testing. What have been the highlights so far? My first major highlight was when I tested the ICP algorithm on a sequence of clouds and saw that it was correctly estimating the relative displacement between them and could also align them. But the most important moment was when the place-recognition system started working, as it’s a huge milestone in my internship.