Artificial tactile perception in robots: why is it important?

Robots are advancing all the time: they can walk and talk, see and hear, and manipulate objects. Developing a sense of touch, however, is more of a challenge, but it brings numerous benefits. Adding a sense of touch to a robot's gripper, for example, would reduce the uncertainty of handling soft, fragile and deformable objects.

Studying how touch perception works in humans and animals is especially important for developing artificial touch perception systems for robots and hand prostheses.

The EU project NeuTouch, in which PAL Robotics is a partner, is training 15 PhD students in cutting-edge touch-related research, ranging from biology and computational neuroscience to artificial systems design and robotics. We are very lucky to have one of these trainees, Luca Lach, working with us at PAL Robotics.

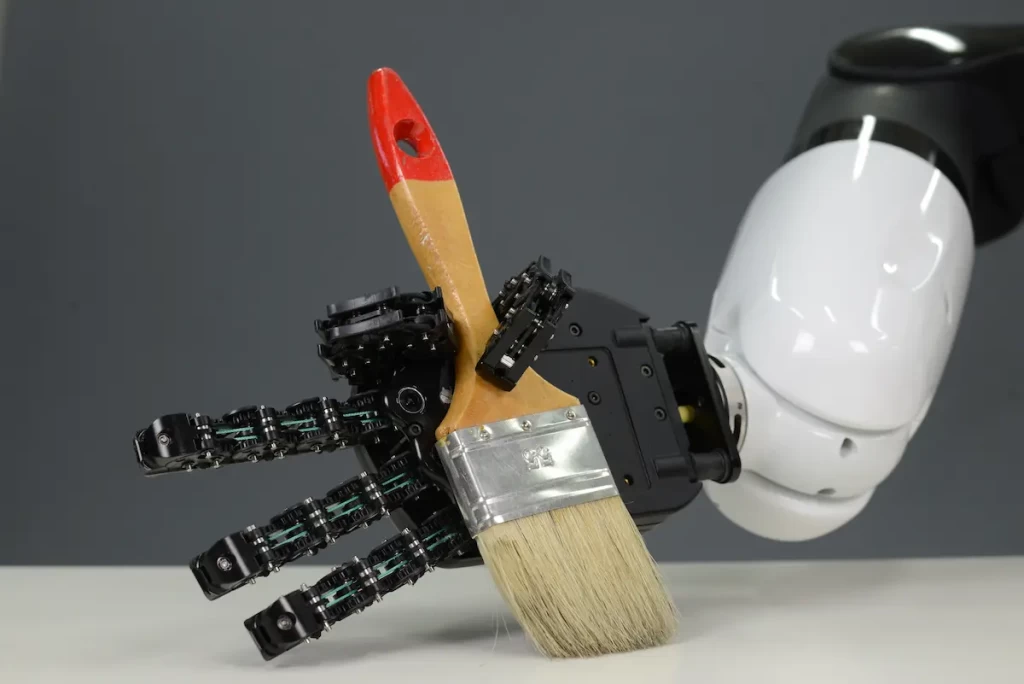

Dexterous manipulation is a fundamental skill for improving the interaction capabilities of service and industrial robots, and for developing prosthetic devices that enable amputees to regain hand functionality, improving their independence and quality of life and helping them return to work.

The sense of touch is crucial for any skilled manipulation: it is fundamental for establishing contact, acquiring the information needed for hand/object interactions, and perceiving variations in the contact itself (slip, vibration, duration, pressure).

Touch is also crucial for robots that physically interact with objects and humans, allowing them to sense the properties of objects, learn how to use them, and cooperate. A sense of touch is likewise necessary for the successful deployment of prosthetic devices: it can evoke a natural sensation of contact that conveys information about the stimulus, making prostheses easier to use and accept.

NeuTouch aims to improve artificial tactile perception in robots and prostheses by understanding how best to extract, integrate and exploit tactile information at the system level.

New touch-sensor-enabled end-effectors for the TIAGo robot

Within the project, PAL Robotics is integrating strain gauge sensors into the fingers of robot grippers, in this case for TIAGo. The advantage of this sensor is that it outputs one value per finger (the magnitude of the force), which makes it easy to use in classic control algorithms. Our research-focused robot TIAGo can be equipped with different kinds of end-effectors, such as two-finger parallel grippers or the HEY5 hand, so newly developed end-effectors with touch sensors can be tested quickly, allowing us to explore different options before settling on a final product. The main advantage of touch sensors is that the robot knows whether it has grasped something successfully and whether it needs to adjust its grip. Both are possible with these simple sensors.
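To illustrate why one force value per finger is enough for both decisions (did the grasp succeed, and does the grip need adjusting?), here is a minimal Python sketch of a threshold-based grip rule. The function name `grip_command`, the command strings and the threshold values in newtons are all hypothetical, chosen only for illustration; this is not PAL Robotics' controller and not a TIAGo API.

```python
# Hypothetical thresholds in newtons; real values would depend on the
# sensor calibration and on the object being grasped.
CONTACT_N = 0.2   # below this, a finger is treated as not touching the object
MIN_GRIP_N = 1.0  # below this, the grip is considered too loose
MAX_GRIP_N = 3.0  # above this, the grip may damage a fragile object


def grip_command(finger_forces):
    """Map one force reading per finger to a simple gripper command.

    Returns "loosen" if any finger presses too hard, "close" while some
    finger has no contact yet, "tighten" if all fingers touch but the grip
    is still loose, and "hold" once the grasp is within the target band.
    """
    if any(f > MAX_GRIP_N for f in finger_forces):
        return "loosen"   # back off to avoid crushing the object
    if any(f < CONTACT_N for f in finger_forces):
        return "close"    # at least one finger has not touched the object
    if min(finger_forces) < MIN_GRIP_N:
        return "tighten"  # contact on every finger, but the grip is weak
    return "hold"         # stable grasp: every finger within the force band


# Example readings from a two-finger gripper (hypothetical values).
for forces in ([0.0, 0.0], [0.5, 0.6], [1.5, 2.0], [1.5, 3.5]):
    print(forces, "->", grip_command(forces))
```

In a real control loop, a rule like this would be evaluated on every new sensor reading, and the thresholds would be tuned per object rather than fixed as constants.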

Three major scenarios are planned for evaluating tactile sensing in the project:

- A collaborative experiment with a touch-based grasping algorithm on different robot platforms.

- A repetition of the experiment on TIAGo with a prototype gripper that uses a sensor matrix.

- Grasping scenarios in simulation environments where artificial intelligence (AI) models can be trained. Here we want to investigate the extent to which touch sensors improve the success rate of machine learning.

The team is exploring several AI approaches for use in the project, two of them being (Deep) Reinforcement Learning and Grey Box Learning.

Advantages and what we still need to learn about the (Deep) Reinforcement Learning method

With the (Deep) Reinforcement Learning method, a computer learns tasks by itself through trial and error, and it normally takes many attempts to learn to complete a task properly. The biggest open question with this method is whether the learned tasks can easily be transferred from a simulator to the real world; results on this are yet to come.
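The trial-and-error idea can be sketched with a deliberately tiny example. The Python code below is a multi-armed bandit, a single-step simplification of reinforcement learning, not the deep RL approach the project will use: an agent repeatedly picks one of five grip-force levels, sees whether a simulated grasp succeeds, and updates its estimate of each level's success rate. The toy environment `simulated_grasp`, its success probabilities and the epsilon-greedy settings are all hypothetical.

```python
import random


def simulated_grasp(force_level, rng):
    """Hypothetical toy environment: returns 1.0 for a successful grasp.

    Force levels 0-4; level 2 is the 'right' force and usually succeeds.
    """
    success_prob = [0.05, 0.3, 0.9, 0.4, 0.1][force_level]
    return 1.0 if rng.random() < success_prob else 0.0


def learn_grip_force(episodes=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy trial and error over discrete grip-force levels."""
    rng = random.Random(seed)
    n_levels = 5
    values = [0.0] * n_levels  # estimated success rate of each force level
    counts = [0] * n_levels    # how many times each level has been tried
    for _ in range(episodes):
        if rng.random() < epsilon:
            action = rng.randrange(n_levels)                    # explore
        else:
            action = max(range(n_levels), key=values.__getitem__)  # exploit
        reward = simulated_grasp(action, rng)
        counts[action] += 1
        # Incremental mean: move the estimate toward the observed reward.
        values[action] += (reward - values[action]) / counts[action]
    return values, counts


values, counts = learn_grip_force()
best = max(range(len(values)), key=values.__getitem__)
print("best force level:", best, "tries per level:", counts)
```

Even in this toy setting the agent needs hundreds of attempts before its estimates settle, which is why such training is usually done in simulation, and why transferring the result to a real robot is the critical question.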

How can the Grey Box Learning method help in this project?

This method takes a different approach. Instead of letting the computer learn the task entirely on its own, it is given collected information about what it should learn. The advantage of this method is that the resulting AI is easier for us humans to interpret.

Neither of these two methods has been applied in the project yet.

Machine learning and touch sensors: current achievements within the project

We interviewed our colleague and NeuTouch participant Luca Lach to gain better insight into this cutting-edge European project.

But first, let's learn more about our colleague Luca. Luca got his Bachelor's degree in Cognitive Computer Science in 2017, followed by a Master's degree in Intelligent Systems at the University of Bielefeld in 2019. Back in 2016 he took part in RoboCup@Home, and he and his team came home as the champions. After graduating, Luca started teaching other students how to program robots and later worked as a research assistant with a PhD student on a humanoid robot. Towards the end of 2019, Luca joined the PAL Robotics team.

Luca, can you tell us about the main accomplishments since the beginning of the project?

Luca told us: "At this point, we have already initiated collaborations with two institutions within this project: Bielefeld University and IIT. I have completed a secondment at Bielefeld University, where I got acquainted with touch sensors. Together with another student from the project, I started developing a touch-based grasping algorithm: I focussed on the generic, platform-independent part, and my colleague developed the platform-specific parts for their Shadow Hand. The goal is to use the touch-based grasping algorithm on different platforms: the Shadow Hand (Bielefeld), iCub (IIT) and TIAGo (PAL). We have started designing the first prototype of a two-fingered gripper for TIAGo, and its parts, sensors and read-out electronics are currently in production."

What are the next steps for PAL Robotics in this project?

Luca continued: "The gripper I mentioned needs to be assembled and integrated into TIAGo. This is the most important task at the moment. Afterwards, we will start the grasping experiments on the PAL Robotics side and continue to disseminate the results through scientific papers.

We are working to develop a second gripper that has a sensor matrix instead of a single sensor. These matrices have the advantage of providing more information about an object’s shape and texture.

The goal for the beginning of next year is to start the transition from hand-written control algorithms to machine learning techniques and AI. One of our aims is to leverage the power of AI to enhance TIAGo's abilities in complex scenarios such as grasping. Therefore, we need to model TIAGo with touch sensors in a reliable and fast simulation environment. The most important thing here is to ensure that an AI trained in simulation can also be applied to real-world situations."
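The difference between a single strain gauge and the sensor matrix Luca mentions can be made concrete with a small sketch. A single sensor reports only a total force, while a matrix of readings also shows where the contact is and how large the contact patch is, a crude cue about an object's shape. The Python function below, `summarize_taxels`, and its contact threshold are hypothetical illustrations, not part of the prototype's software; recovering texture would additionally require readings over time, which this sketch does not attempt.

```python
def summarize_taxels(matrix, threshold=0.1):
    """Summarize a 2-D tactile matrix given as rows of pressure readings.

    Returns the total force (all a single sensor could report), the number
    of cells in contact, and the pressure-weighted centroid (row, col) of
    the contact patch, or None if nothing is touching the sensor.
    """
    total = 0.0
    row_moment = col_moment = 0.0
    contact_cells = 0
    for r, row in enumerate(matrix):
        for c, pressure in enumerate(row):
            total += pressure
            row_moment += r * pressure
            col_moment += c * pressure
            if pressure > threshold:
                contact_cells += 1
    if total == 0:
        return {"total": 0.0, "contact_cells": 0, "centroid": None}
    return {
        "total": total,
        "contact_cells": contact_cells,
        "centroid": (row_moment / total, col_moment / total),
    }


# Hypothetical 3x3 reading: an object pressing on the lower-right corner.
reading = [
    [0.0, 0.0, 0.0],
    [0.0, 1.0, 1.0],
    [0.0, 1.0, 1.0],
]
print(summarize_taxels(reading))
```

Two objects that produce the same total force can still produce very different contact patches, which is the extra information a matrix provides over one value per finger.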

If this topic interests you, don't hesitate to get in touch about possible collaborations. As you may already know, at PAL Robotics we work on many cutting-edge projects and are always on the lookout for new partners who want to make a difference by applying AI and robotics.

For more articles on robotics, check out the blog of PAL Robotics.